How do you build an email marketing strategy that creates thriving campaigns? Often, the answer is A/B testing, which brings a more intelligent, data-driven way of making decisions. Keep reading for more.

What is better: short or long copy? GIFs or static images? White or purple CTA buttons?

So many options and decisions go into the perfect email marketing strategy, one that brings thriving click-to-open rates (CTOR) and click-through rates (CTR) to your campaigns. And the pressure is high, since many marketers still consider email one of their most important marketing channels, ranking it even above social media.

So how can you develop your email campaign strategy systematically?

In this article, we answer this question and a lot more to help you build intelligent A/B tests for your campaigns and get better results.

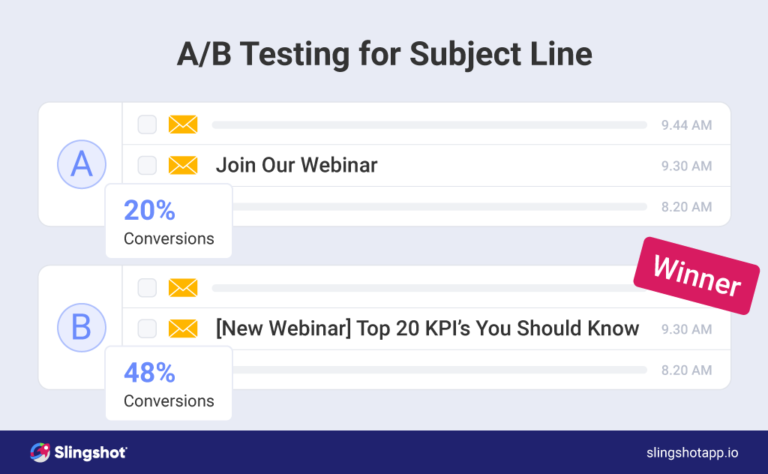

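In an email marketing campaign, A/B testing is the method of sending two variations of the same email, with one variable changed, to see which version performs better. The changed variable can be anything from the length of the copy or the type of image (GIF or static) to the color of the CTA button.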

A/B testing (also called split testing) helps marketers gather the information that leads to better results and more opens and click-throughs, and it reveals the audience’s preferences and how emails perform overall.

Marketers who run email campaigns regularly turn to A/B testing because it shows, with statistical confidence, which version of an email campaign performs best. It’s also a way to get to know an audience faster than usual – and to optimize your team’s strategy accordingly.

“Email has an ability many channels don’t: creating valuable, personal touches—at scale.”

– David Newman, author of Do It! Marketing.

In other words: you need A/B testing to get the most out of your email marketing without guessing blindly. A/B split testing provides the data for deciding which adjustments and strategies your email campaigns need. It provides the opportunity to learn and improve:

These are just a handful of the “secrets” A/B testing reveals; there is much more it can tell you, depending on what you are trying to measure. Finding what influences and drives more conversions and sales is what every marketer strives for, and the data from A/B testing can help with exactly that.

When you’re experimenting with your email campaigns, it’s essential to consider several things before you start A/B testing. Here are some rules to follow when setting up your tests.

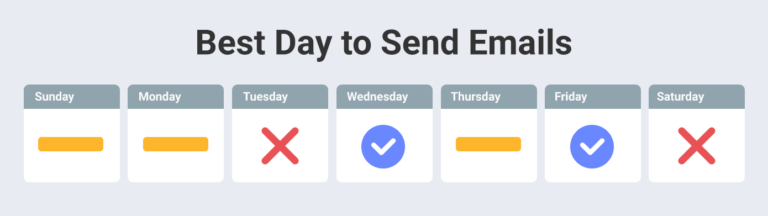

It’s not wise to send the two versions of an email at different times. Run the variations of an A/B test simultaneously; otherwise, you won’t know whether differences in performance come from the variable you changed or from an external factor you didn’t consider, like sending on a different day of the week or in a different month. The only exception is when you’re testing the optimal sending time itself: then the sending time is the variable, so the versions naturally go out at different times.

It’s important to give the A/B test enough time for statistically meaningful differences between the two variations to emerge. One study finds, for example, that a wait time of 2 hours correctly predicts the all-time winner more than 80% of the time, and a wait time of 12 hours or more is correct over 90% of the time. So let your test run long enough to see significant results.
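
To judge whether a gap between two variants is real or just noise, one common statistical check (an assumption here, not necessarily what any particular email tool does) is a two-proportion z-test on click counts. The sketch below assumes you know the number of sends and clicks for each variant; the function name `two_proportion_z_test` and the example counts are hypothetical.

```python
import math
from typing import Tuple


def two_proportion_z_test(clicks_a: int, sends_a: int,
                          clicks_b: int, sends_b: int) -> Tuple[float, float]:
    """Return (z, two-sided p-value) for the difference in click rates.

    Hypothetical helper using the pooled two-proportion z-test, a normal
    approximation that is reasonable when each variant has enough sends.
    """
    rate_a = clicks_a / sends_a
    rate_b = clicks_b / sends_b
    # Pooled click rate under the null hypothesis that both variants perform equally.
    pooled = (clicks_a + clicks_b) / (sends_a + sends_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / sends_a + 1 / sends_b))
    z = (rate_b - rate_a) / std_err
    # Two-sided p-value: 2 * (1 - Phi(|z|)) equals erfc(|z| / sqrt(2)).
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Made-up results: variant A got 40 clicks from 1,000 sends, variant B got 65.
z, p = two_proportion_z_test(40, 1000, 65, 1000)
print(f"z = {z:.2f}, p = {p:.4f}")
if p < 0.05:
    print("The difference is statistically significant at the 5% level.")
else:
    print("Not enough evidence yet; let the test run longer or use a larger list.")
```

A small p-value (conventionally below 0.05) suggests the difference is unlikely to be chance. Checking repeatedly while the test is running and stopping at the first significant result inflates false positives, so it helps to fix the test duration in advance.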

You might want to test several things at once, but it’s best practice to change one variable per test and measure performance against it. That way, you can be sure exactly what is responsible for changes in email performance. Testing several variables at the same time is a separate technique, called multivariate testing, which deserves to be explored on its own.

For more conclusive results, send your variations to audiences that are similar in size and composition and randomly assigned, especially if you’re testing two or more audiences at the same time.
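
Random assignment is what makes the two groups comparable. A minimal sketch, assuming your list is a plain Python list of email addresses; `split_ab` is a hypothetical helper, and A/B testing tools typically handle this step for you:

```python
import random
from typing import List, Optional, Tuple


def split_ab(contacts: List[str], seed: Optional[int] = None) -> Tuple[List[str], List[str]]:
    """Randomly assign contacts to two groups whose sizes differ by at most one."""
    shuffled = list(contacts)   # copy so the caller's list is not reordered
    rng = random.Random(seed)   # passing a seed makes the split reproducible
    rng.shuffle(shuffled)
    half = len(shuffled) // 2
    return shuffled[:half], shuffled[half:]


# Hypothetical list of 1,000 contacts.
contacts = [f"contact{i}@example.com" for i in range(1000)]
group_a, group_b = split_ab(contacts, seed=42)
print(len(group_a), len(group_b))
```

Shuffling before splitting means that any ordering in the list (for example, by sign-up date) is spread across both groups instead of being concentrated in one of them.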

According to HubSpot, your A/B send list should contain at least 1,000 contacts to produce statistically relevant results. With fewer contacts than that, you have to include a larger and larger share of your list in the test to reach statistically relevant results.
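
The 1,000-contact figure is a rule of thumb; the number you actually need depends on your baseline rate and on how small a difference you want to detect. The sketch below uses the standard normal-approximation formula for comparing two proportions, n = (z₁₋α/₂ + z₁₋β)² · [p₁(1 − p₁) + p₂(1 − p₂)] / (p₂ − p₁)². The function name, the 5% significance level, and the 80% power defaults are assumptions for illustration:

```python
import math
from statistics import NormalDist


def sample_size_per_variant(baseline_rate: float, expected_rate: float,
                            alpha: float = 0.05, power: float = 0.8) -> int:
    """Approximate contacts needed in EACH variant to detect the given change.

    Hypothetical helper based on the normal approximation for two proportions.
    """
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided significance threshold
    z_beta = NormalDist().inv_cdf(power)           # desired statistical power
    variance = baseline_rate * (1 - baseline_rate) + expected_rate * (1 - expected_rate)
    effect = expected_rate - baseline_rate
    return math.ceil((z_alpha + z_beta) ** 2 * variance / effect ** 2)


# Made-up scenario: 4% baseline click rate, hoping to detect a lift to 6%.
print(sample_size_per_variant(0.04, 0.06))
```

Because the difference appears squared in the denominator, halving the difference you want to detect roughly quadruples the required sample, which is why small lists can only reliably detect large differences.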

Whether you’re testing emails, websites, or landing pages, the least-effort approach is to use an A/B testing tool such as HubSpot or MailChimp, which makes it easier to run your experiments and gather their data.

An A/B test can affect several performance metrics at once, but it’s better to pick one primary metric to focus on before you run the test. This metric is your “dependent” variable: the outcome that changes in response to the variable you manipulate (the “independent” variable). Deciding which metric matters most to you defines this dependent variable and helps you set up your A/B test in the best way.
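
To see why the primary metric should be fixed in advance, here is a sketch that computes several common email metrics for two variants. The counts are made up, `VariantStats` is a hypothetical container, and the metric definitions (especially conversion rate, which tools define differently) are assumptions:

```python
from dataclasses import dataclass


@dataclass
class VariantStats:
    """Hypothetical per-variant counts exported from an email tool."""
    delivered: int
    unique_opens: int
    unique_clicks: int
    conversions: int

    def open_rate(self) -> float:
        return self.unique_opens / self.delivered

    def ctr(self) -> float:
        # Click-through rate: clicks as a share of delivered emails.
        return self.unique_clicks / self.delivered

    def ctor(self) -> float:
        # Click-to-open rate: clicks as a share of people who opened.
        return self.unique_clicks / self.unique_opens

    def conversion_rate(self) -> float:
        # Assumed definition: conversions as a share of people who clicked.
        return self.conversions / self.unique_clicks


PRIMARY_METRIC = "ctor"  # chosen before the test starts, not after seeing results

variants = {
    "A": VariantStats(delivered=1000, unique_opens=220, unique_clicks=40, conversions=8),
    "B": VariantStats(delivered=1000, unique_opens=200, unique_clicks=50, conversions=9),
}

for name, stats in variants.items():
    print(name, f"open rate {stats.open_rate():.3f}", f"CTOR {stats.ctor():.3f}")

# Pick the variant with the highest value of the pre-chosen primary metric.
winner = max(variants, key=lambda name: getattr(variants[name], PRIMARY_METRIC)())
print("Winner on", PRIMARY_METRIC, "is", winner)
```

In this made-up data, variant A wins on open rate while variant B wins on CTOR, so the “winner” depends entirely on which metric you chose. A real test would also check that the difference is statistically significant before declaring a winner.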

So why should you start A/B testing immediately, if you’re not already?

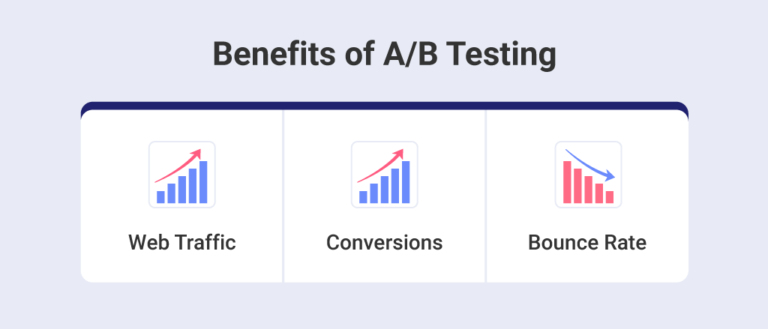

Mastering the craft of split testing is worth the effort. Here are some of the benefits:

Driving people to your website, product, or landing page is the number one goal of email campaigns. Getting enough people to click the linked CTA button is what marketers hope for when they create their A/B tests and later look at the data.

The variable you change in an A/B email test has one primary purpose: to increase the number of people who click through and then fill out a form, turning them into leads on your website. Increasing the conversion rate is one of the main benefits of split testing.

A/B testing can also help lower your website’s bounce rate, by trying out different copy, introductions, CTA buttons, layouts, and so on. It all comes down to getting to know your target customers better and learning your audience’s preferences through data, so you can adjust your strategy accordingly.

You can’t run efficient A/B tests, or win happy new customers with them, without the right tools.

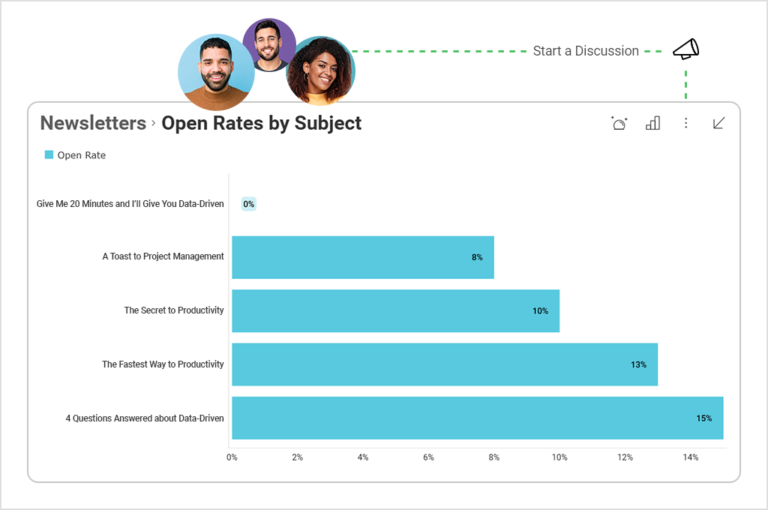

But whichever tools you choose, one thing is for sure – your A/B testing becomes meaningful only through the data insights that tell the story of what you have done so far – and what you should do next.

Testing, and testing often, is necessary when you’re trying to get to know your customers, raise open and click-through rates, and create more conversions. But it all comes down to the data you gather.

“What gets measured gets improved.”

– Peter Drucker

What your A/B testing needs is an all-in-one digital workplace with strong data analytics features, one that provides the data-driven capabilities to help you execute your email marketing strategy from start to finish.

Slingshot can help improve your results by letting you: